To speed up the procedure for building an emotive/expressive talking head, an integrated software package called INTERFACE was designed and implemented in Matlab©. INTERFACE simplifies and automates many of the operations needed for that purpose. A set of processing tools was implemented to build up the animation engine, which is based on the Cohen-Massaro coarticulation model, and to create the correct WAV and FAP files needed for the animation. These tools focus mainly on dynamic articulatory data physically extracted by an automatic optotracking 3D movement analyzer. LUCIA, our animated MPEG-4 talking face, can copy a real human by reproducing the movements of markers placed on the speaker's face and recorded by an optoelectronic device. It can also be driven directly by emotional XML-tagged input text, which realizes true audio-visual emotive/expressive synthesis. LUCIA's voice is based on an Italian version of the FESTIVAL-MBROLA packages, modified for expressive/emotive synthesis by means of an appropriate APML/VSML tagged language.
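The Cohen-Massaro model blends per-segment articulatory targets through dominance functions. Below is a minimal Python sketch of that blending for a single parameter (INTERFACE itself is written in Matlab and uses a modified version of the model). It assumes exponential dominance functions with separate anticipatory and carry-over rates. The class and function names and all parameter values are illustrative placeholders, not the ones INTERFACE trains.

```python
import math
from dataclasses import dataclass


@dataclass
class Segment:
    """A phonetic segment with a target value for one articulatory parameter."""
    center: float             # time of the segment centre, in seconds
    target: float             # target value of the parameter (e.g. lip opening in mm)
    alpha: float = 1.0        # peak magnitude of the dominance function (placeholder)
    theta_ant: float = 20.0   # decay rate before the centre (anticipatory), placeholder
    theta_car: float = 20.0   # decay rate after the centre (carry-over), placeholder
    c: float = 1.0            # exponent shaping the decay


def dominance(seg: Segment, t: float) -> float:
    """D(t) = alpha * exp(-theta * |tau|**c), with tau = centre - t."""
    tau = seg.center - t
    # tau >= 0 means t precedes the centre, so the anticipatory rate applies.
    theta = seg.theta_ant if tau >= 0 else seg.theta_car
    return seg.alpha * math.exp(-theta * abs(tau) ** seg.c)


def parameter_track(segments, times):
    """Dominance-weighted average of the segment targets at each time instant.

    Assumes at least one segment has non-negligible dominance at every t.
    """
    track = []
    for t in times:
        weights = [dominance(s, t) for s in segments]
        total = sum(weights)
        track.append(sum(w * s.target for w, s in zip(weights, segments)) / total)
    return track
```

For example, `parameter_track([Segment(0.10, 12.0), Segment(0.30, 0.0)], [k / 25 for k in range(10)])` yields a lip-opening track that moves smoothly from the first target towards the second. Training, in this simplified view, means choosing targets, `alpha`, the `theta` rates and `c` so that the blended track fits measured marker trajectories.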

See the AISV 2004 paper (in Italian).

INTERFACE will be presented at INTERSPEECH 2005.

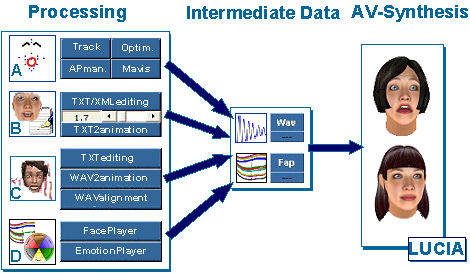

INTERFACE, whose block diagram is shown in the figure above, is an integrated software package designed and implemented in Matlab©. It simplifies and automates many of the operations needed for building up a talking head. INTERFACE focuses mainly on articulatory data collected by ELITE, a fully automatic movement analyzer for 3D kinematic data acquisition [2]. ELITE reconstructs 3D coordinates from 2D perspective projections by means of a stereophotogrammetric procedure that allows the TV cameras to be positioned freely. The 3D coordinates are then used to create our lip articulatory model and to drive our talking face directly, copying human facial movements. INTERFACE was created mainly to develop LUCIA [3], our graphic MPEG-4 [4] compatible facial animation engine (FAE). In MPEG-4, FDPs (Facial Definition Parameters) define the shape of the model, while FAPs (Facial Animation Parameters) define the facial actions [5]. In our case, the model uses a pseudo-muscular approach. Muscle contractions are obtained by deforming the polygonal mesh around feature points that correspond to skin muscle attachments. A particular facial action sequence is generated by deforming the face model, starting from its neutral state, according to the specified FAP values. Each value indicates the magnitude of the corresponding action at the corresponding time instant.
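ELITE's own stereophotogrammetric procedure is not detailed here. As a generic illustration of how 3D marker coordinates can be recovered from 2D perspective projections, the sketch below implements standard linear (DLT-style) triangulation. It is not necessarily what ELITE does. It assumes each camera has already been calibrated into a 3x4 projection matrix, and all names are hypothetical.

```python
def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(m, b):
    """Solve the 3x3 linear system m x = b with Cramer's rule."""
    d = _det3(m)
    if abs(d) < 1e-12:
        raise ValueError("degenerate camera geometry")
    return [_det3([[b[i] if j == k else m[i][j] for j in range(3)] for i in range(3)]) / d
            for k in range(3)]


def project(P, X):
    """Pinhole projection of a 3D point X with a 3x4 camera matrix P."""
    h = [sum(P[i][j] * c for j, c in enumerate((*X, 1.0))) for i in range(3)]
    return h[0] / h[2], h[1] / h[2]


def triangulate(projections, points_2d):
    """Linear triangulation of one marker seen by two or more calibrated cameras.

    Each view (P, (u, v)) contributes two equations,
    (u*P[2] - P[0]) . [X, Y, Z, 1] = 0 and (v*P[2] - P[1]) . [X, Y, Z, 1] = 0.
    The stacked system is solved for (X, Y, Z) in the least-squares sense
    through its normal equations.
    """
    rows = []
    for P, (u, v) in zip(projections, points_2d):
        rows.append([u * P[2][j] - P[0][j] for j in range(4)])
        rows.append([v * P[2][j] - P[1][j] for j in range(4)])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [-sum(r[i] * r[3] for r in rows) for i in range(3)]
    return _solve3(ata, atb)
```

Because any number of views can be stacked, the same routine works however many cameras see a marker, which suits freely positioned cameras. With noisy real data, the result minimizes an algebraic rather than a geometric error.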

INTERFACE handles four types of input data. From each type, the corresponding MPEG-4 compliant FAP stream can be created:

(A) Articulatory data, represented by the marker trajectories captured by ELITE; these data are processed by four programs:

Track, which defines the pattern used for acquisition and implements a new 3D trajectory reconstruction procedure; Optimize, which trains the modified coarticulation model [6] used to move the lips of LUCIA, the talking head currently under development; APmanager, which allows articulatory parameters to be defined in relation to marker positions and also acts as a database manager for all the files used in the optimization stages; and Mavis (Multiple Articulator VISualizer, written by Mark Tiede of ATR Research Laboratories [7]), which provides different visualizations of articulatory signals.
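APmanager lets articulatory parameters be defined in relation to marker positions. The sketch below shows one plausible way to do that. It assumes each parameter is either a distance between two markers or a marker displacement from a neutral posture. It also assumes the coordinates are already expressed in a head-fixed frame. The parameter names (LO, LW, ULP, LLP), marker labels and axis convention are hypothetical and are not taken from INTERFACE.

```python
import math

# Hypothetical definitions: each distance parameter is the Euclidean distance
# between two named markers (placeholder labels, not actual ELITE marker names).
DISTANCE_PARAMS = {
    "LO": ("upper_lip", "lower_lip"),       # lip opening
    "LW": ("left_corner", "right_corner"),  # lip width, a cue for rounding/spreading
}


def articulatory_parameters(frame, neutral, protrusion_axis=1):
    """Compute articulatory parameters for one frame of 3D marker positions.

    frame, neutral: dicts mapping marker name -> (x, y, z) in mm; `neutral`
    is the rest posture. protrusion_axis is the index of the coordinate that
    points out of the face (an assumed convention).
    All parameters are returned as differences from the neutral posture.
    """
    params = {}
    for name, (a, b) in DISTANCE_PARAMS.items():
        params[name] = math.dist(frame[a], frame[b]) - math.dist(neutral[a], neutral[b])
    # Lip protrusion: forward displacement of each lip marker relative to rest.
    for name, marker in (("ULP", "upper_lip"), ("LLP", "lower_lip")):
        params[name] = frame[marker][protrusion_axis] - neutral[marker][protrusion_axis]
    return params
```

Keeping the definitions in a table, rather than hard-coding them, mirrors the idea of parameters that can be redefined against different marker sets.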

(B) Symbolic high-level TXT/XML text data, processed by:

TXT/XMLediting, an emotion-specific XML editor for emotion-tagged text to be used in TTS and facial animation output; TXT2animation, the core animation tool, which transforms the tagged input text into the corresponding WAV and FAP files. The WAV files are synthesized by the emotive/expressive version of FESTIVAL. The FAP files, which are needed to animate MPEG-4 engines such as LUCIA, are produced by the optimized animation model (designed with Optimize). Finally, TXTediting is a simple text editor for unemotional text to be used in TTS and facial animation output.
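Before TXT2animation can synthesize speech and facial motion, the tagged text has to be split into spans that each carry an emotion label. The sketch below does this with Python's standard XML parser. It assumes a hypothetical schema with nested `<emotion type="..." intensity="...">` elements. The actual APML/VSML tag set used by INTERFACE is not reproduced here, and the function name is illustrative.

```python
import xml.etree.ElementTree as ET


def emotion_spans(xml_text, default="neutral"):
    """Split emotion-tagged text into (text, emotion, intensity) spans.

    Assumes a hypothetical schema: <emotion type="..." intensity="...">
    elements may be nested anywhere inside a root element. Untagged text
    keeps the emotion of its enclosing element, or `default` (intensity 0)
    at top level.
    """
    root = ET.fromstring(xml_text)
    spans = []

    def walk(elem, emotion, intensity):
        if elem.tag == "emotion":
            emotion = elem.get("type", emotion)
            intensity = float(elem.get("intensity", 1.0))
        if elem.text and elem.text.strip():
            spans.append((elem.text.strip(), emotion, intensity))
        for child in elem:
            walk(child, emotion, intensity)
            # Text after a child's closing tag belongs to the current (outer) element.
            if child.tail and child.tail.strip():
                spans.append((child.tail.strip(), emotion, intensity))

    walk(root, default, 0.0)
    return spans
```

Each span could then be passed to the emotive TTS and to the animation model with its own emotion settings.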

(C) WAV data, processed by:

WAV2animation, a simple tool that builds animations from input WAV files after segmenting them automatically with an ASR alignment system [8]; and WAalignment, a simple segmentation editor for adjusting the segmentation boundaries created by WAV2animation.
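Once a WAV file has been segmented, the phone boundaries must be mapped onto animation frames. The sketch below samples a segmentation at a fixed frame rate. It assumes 25 frames per second, and it assumes the aligner output is a sorted, contiguous list of `(start, end, phone)` tuples starting at 0 s. The frame rate, tuple layout and function name are assumptions, not details of WAV2animation or of the aligner in [8].

```python
import math
from bisect import bisect_right


def frame_labels(segments, fps=25.0):
    """Sample a phone segmentation at the animation frame rate.

    segments: sorted, contiguous (start_s, end_s, phone) tuples starting at 0 s.
    Returns the phone active at each frame time t_k = k / fps, for all t_k < end.
    """
    if not segments or segments[0][0] > 0:
        raise ValueError("segmentation must start at 0 s")
    starts = [start for start, _, _ in segments]
    # Number of frame times strictly before the end; the epsilon absorbs rounding error.
    n_frames = math.ceil(segments[-1][1] * fps - 1e-9)
    labels = []
    for k in range(n_frames):
        i = bisect_right(starts, k / fps) - 1  # last segment starting at or before t_k
        labels.append(segments[i][2])
    return labels
```

Moving a boundary in a segmentation editor changes which frames receive each label. The labels can then be turned into per-frame articulatory targets.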

(D) Manual graphic low-level data, created by:

FacePlayer, which provides direct low-level manual/graphic control of a single FAP parameter or a group of them. In other words, FacePlayer renders LUCIA's animation while the user acts on MPEG-4 FAP points, giving useful immediate feedback.
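FacePlayer lets the user act directly on individual MPEG-4 FAPs. In MPEG-4, FAP values are expressed in facial animation parameter units (FAPUs), which are fractions of key distances measured on the neutral face. As a result, the same FAP value produces proportionate movement on faces of different sizes. The sketch below turns one FAP value into a translation of a feature point. The function names, the neutral distances and the movement direction are hypothetical. The sketch does not model LUCIA's pseudo-muscular deformation of the surrounding mesh.

```python
def fapus(neutral_distances):
    """Derive MPEG-4 FAP units from distances measured on the neutral face.

    neutral_distances: dict with keys ES0, IRISD0, ENS0, MNS0, MW0 (e.g. in mm).
    Each distance unit is 1/1024 of the corresponding neutral distance;
    the angle unit AU is 1e-5 rad.
    """
    units = {key[:-1]: value / 1024.0 for key, value in neutral_distances.items()}
    units["AU"] = 1e-5
    return units


def displace_feature_point(neutral_point, direction, fap_value, unit, units):
    """Translate a feature point by fap_value * FAPU along a unit direction vector.

    Only translational FAPs are handled; rotations (AU) would need a different rule.
    """
    if unit == "AU":
        raise ValueError("angular FAPs are not translations")
    shift = fap_value * units[unit]
    return tuple(p + shift * d for p, d in zip(neutral_point, direction))
```

For instance, with a hypothetical mouth-nose separation of 30 mm, a FAP value of 200 in MNS units moves the point 200 × 30 / 1024 ≈ 5.86 mm along the chosen direction.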

For more information, please contact:

Istituto di Scienze e Tecnologie della Cognizione - Sezione di Padova "Fonetica e Dialettologia"
